Abstract

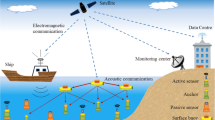

Many of the socio-economic and environmental challenges of the 21st century, such as growing energy and food demand and rising sea levels and temperatures, put stress on marine ecosystems and coastal populations. Addressing them requires a significant strengthening of our monitoring capacities for processes in the water column, at the seafloor and in the subsurface. However, present-day seafloor instruments and the infrastructure required to operate them are expensive and inaccessible. We envision a future Internet of Underwater Things, composed of small and cheap but intelligent underwater nodes. Each node will be equipped with sensing, communication, and computing capabilities. Building on distributed event detection and cross-domain data fusion, such an Internet of Underwater Things will enable new applications. In this paper, we argue that to make this vision a reality, we need new methodologies for resource-efficient and distributed cross-domain data fusion. Resource-efficient, distributed neural networks will serve as data-analytics pipelines to derive highly aggregated patterns of interest from raw data. These patterns will (1) serve as a common base in time and space for the fusion of heterogeneous data, and (2) be sufficiently small to be transmitted efficiently in resource-constrained settings.

Introduction

Growing populations, a rising resource demand and the necessity to decarbonize energy production lead to a growing commercial utilization of the seafloor. In times of rising sea level and severe weather events, this increases the vulnerability of coastal populations and infrastructure. Against this background, the United Nations (UN) announced the “Decade of ocean science for sustainable development” to develop strategies to mitigate the impact of climate change, reduce ocean pollution, secure marine ecosystems and facilitate a sustainable economic utilization of the ocean [1]. Most challenges of a sustainable utilization of the ocean require a significant expansion of monitoring capacities, which are currently almost entirely limited to specialized companies and a few research institutes worldwide. Therefore, the UN is calling for Small Island Developing States to be given global access to ocean science instrumentation and expertise [1].

Conventional ocean technology, however, is expensive and requires highly trained specialists and complex onshore and offshore infrastructure to operate. The vast majority of current seafloor instruments only record sensor data passively, and data transfer occurs only after recovery of the instruments or via inefficient acoustic communication. Therefore, it is necessary to develop a new class of ocean technology that makes use of the technological advances in electronics and computing and that is affordable, accessible and open. The reduced energy demand and size of electronic components allow a significant reduction of the required pressure-sealed volume, which is a main driver of costs, comparable to the effect of reducing the required payload in space technology. Reducing the weight of seafloor instruments from hundreds of kilograms to a few kilograms allows these systems to be operated from smaller (and thus more cost-efficient) ships and significantly reduces the cost of shipping these instruments to remote regions. At the same time, major advances in Artificial Intelligence (AI) have fueled the development of smart sensors, and networks of connected devices (the Internet of Things [IoT]) have revolutionized many aspects of our daily lives. We argue in this paper that it is time to bring these advances to the seafloor via an Internet of Underwater Things (IoUT).

Potential of smart seafloor sensor network applications

Shoreline-crossing monitoring of submarine volcanoes

The 2018 volcanic sector collapse of Anak Krakatau (Indonesia) and the 2022 explosive eruption of the volcano Hunga Tonga-Hunga Ha’apai (Tonga) triggered tsunamis that caused severe damage to nearby coastal settlements [2, 3]. Most coastal regions surrounding the Pacific and Indian Oceans are equipped with early-warning systems for tsunamis triggered by major earthquakes. However, neither submarine volcanic eruptions nor the associated tsunamis are part of monitoring and early-warning systems, leaving coastal societies unprepared. Unlike earthquakes, volcanic eruptions and landslides are generally preceded by a wide range of precursory signs, including deformation, heat and gas emissions, and seismicity, which in the case of onshore volcanoes can be monitored on-site or by remote sensing. Satellite-based remote sensing has become one of the most important tools for monitoring volcanoes, especially remote ones [4]. It allows monitoring not only the course of an eruption but also precursory processes like gas and ash emissions or deformation, the latter using Interferometric Synthetic Aperture Radar (InSAR) data, as seen for the 2018 Anak Krakatau eruption [5, 6] and the 2021 Cumbre Vieja (La Palma, Canary Islands) eruption [7]. However, some crucial volcanological measurements, like seismicity or the analysis of gas compositions, can only be made with ground-based sensors, while the submerged part of marine volcanoes cannot be monitored by satellite at all. To achieve shoreline-crossing monitoring of marine volcanoes, it is necessary to combine area-wide measurements using remote sensing techniques with point measurements using stationary seafloor sensors (landers). Monitoring with remote sensing often has large temporal gaps in the coverage of a specific target, while point measurements often lack sufficient spatial coverage to unambiguously detect processes.
The shortcomings of each measurement approach can be effectively compensated by the other, but this requires the cross-domain fusion of complex data streams (Fig. 1). Data transfer between the marine and the subaerial parts of integrated networks can be realized with buoys or autonomous underwater vehicles acting as relays [8]. The main aim of volcano monitoring is to detect potential hazards, and thus information from the submarine domain needs to be transferred onshore. This requires communication strategies that reduce the amount of transferred data, sending either at predefined intervals or when changes in the system occur, which in turn requires robust pattern recognition on-device or within the underwater network.

Fig. 1 (a) Sketch of a distributed submarine network on the flanks of Stromboli (Italy). Submarine data from the EMODnet initiative and onshore topography from the Shuttle Radar Topography Mission. (b) Cross-domain fusion between cloud-based remote sensing data (Domain 2), i.e., images, and a distributed underwater sensor network (Domain 1) providing time-series data

Monitoring of sub-seafloor storage of CO2

Carbon Capture and Storage (CCS) is one of the key technologies for mitigating future CO2 emissions, and its industrial-scale implementation is part of every emission reduction path to limit global warming to 2 or even 1.5 °C defined by the Intergovernmental Panel on Climate Change (IPCC) in its 2018 special report [9]. The most promising geological storage options in Europe are exploited hydrocarbon reservoirs and marine aquifers. Marine aquifers in the Norwegian sector of the North Sea alone have a storage capacity of 70 gigatons [10], and they are the storage formation of the first and only actively operating submarine CCS site, Sleipner, where CO2 is injected into the sandstones of the Utsira Formation ~ 900 m below the seafloor [11]. Peak CO2 injection rates were 1 Mt per year, which is only a fraction of the up to 10 Gt per year required in the IPCC scenarios to stay on the 1.5 °C path [9]. Scaling injection rates to a level that contributes meaningfully to the global CO2 emission budget requires novel, cost-efficient monitoring strategies, since current reservoir performance and leakage detection monitoring builds on three-dimensional (3D) seismic surveying, which can only be repeated at multi-year intervals and is difficult to scale. However, cost-efficient fiber-optic cable and seafloor lander-based monitoring techniques are currently in development [12]. These allow continuous monitoring of seafloor deformation as a proxy for fluid migration in the subsurface, but require repeated ground truthing using seismic data and need to be calibrated by geomechanical and fluid flow simulations [12]. These data domains are highly uncorrelated, and cross-domain fusion is required to enable joint analysis and data mining. Further, the limited communication links of the devices practically enforce on-device analytics to reduce the need for communication.
Monitoring strategies for CO2 storage sites need to be able to detect long-term changes (e.g., an increase of tilt or uplift over time) as well as sudden events indicating potential problems in the integrity of the storage formation (e.g., shallow seismicity indicating hydrofracturing or changes in the water chemistry). In the following, we discuss how intelligent Underwater Sensor Networks can address such challenges.
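The two detection tasks named above, slow drifts and sudden events, can be illustrated with a minimal Python sketch. It estimates a long-term trend with a least-squares slope and flags sudden events with a short-term/long-term average (STA/LTA) ratio, a trigger commonly used in seismology. Neither method is prescribed by this paper; the function names, window lengths and threshold are illustrative assumptions.

```python
from statistics import mean


def linear_trend(samples, dt=1.0):
    """Least-squares slope of evenly spaced samples (signal units per time unit)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    t_mean = (n - 1) / 2.0
    y_mean = mean(samples)
    num = sum((i - t_mean) * (y - y_mean) for i, y in enumerate(samples))
    den = sum((i - t_mean) ** 2 for i in range(n))
    return num / den / dt


def sta_lta_triggers(samples, sta_len=5, lta_len=50, threshold=4.0):
    """Indices where the short-term average of |x| is at least `threshold`
    times the long-term average of |x| in the window just before it."""
    energy = [abs(x) for x in samples]
    triggers = []
    for end in range(lta_len + sta_len, len(energy) + 1):
        sta = mean(energy[end - sta_len:end])
        lta = mean(energy[end - sta_len - lta_len:end - sta_len])
        if lta > 0 and sta / lta >= threshold:
            triggers.append(end - 1)
    return triggers


# Hypothetical data: a slowly increasing tilt and a noisy trace with one burst.
tilt = [0.01 * i for i in range(100)]
trace = [0.1] * 200
trace[120:125] = [5.0] * 5

print("tilt trend per sample:", linear_trend(tilt))
print("first sudden-event trigger at sample:", sta_lta_triggers(trace)[0])
```

On a sensor node, the trend estimate would typically be reported rarely (e.g., once per day), whereas a trigger would be reported immediately; both are far smaller than the raw samples they summarize.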

Research challenges

Underwater communication

Wireless broadband communication is an important cornerstone of modern network computing, but it is not possible underwater, since high-frequency electromagnetic waves are absorbed by seawater due to its high electrical conductivity. Therefore, underwater communication is generally limited to acoustic communication, which operates at frequencies between 10 and 400 kHz with data transfer rates of 10s of kbit/s over some hundred meters. Present-day 5G mobile communication achieves data transfer rates that are about a million times higher, using frequencies between 24 and 54 GHz and enabling 10s of Gbit/s over several kilometers. Acoustic waves (speed of sound in water: ~ 1,500 m/s) also travel about 200,000 times slower than electromagnetic waves (speed of light: ~ 3 × 10⁸ m/s), adding significant latency to acoustic communication [13]. Adding to these limitations, acoustic transmission has a high energy consumption and thus requires high battery capacities, which limits the size and cost reduction potential of underwater network nodes for IoUT applications [13].
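The propagation speeds and data rates quoted above translate into concrete numbers as follows. The sketch uses the speeds from the text; the 500 m link distance and the representative rates of 10 kbit/s and 10 Gbit/s (the low ends of the "10s of" ranges) are illustrative assumptions. With these values, the speed ratio works out to 2 × 10⁵ and the rate ratio to 10⁶.

```python
SPEED_OF_SOUND_WATER = 1_500.0  # m/s, value from the text
SPEED_OF_LIGHT = 3.0e8          # m/s, value from the text

ACOUSTIC_RATE_BPS = 10e3        # assumed: low end of "10s of kbit/s"
RADIO_5G_RATE_BPS = 10e9        # assumed: low end of "10s of Gbit/s"


def propagation_delay(distance_m, speed_m_s):
    """One-way propagation delay in seconds."""
    return distance_m / speed_m_s


speed_ratio = SPEED_OF_LIGHT / SPEED_OF_SOUND_WATER
rate_ratio = RADIO_5G_RATE_BPS / ACOUSTIC_RATE_BPS

distance = 500.0  # m, hypothetical distance between two nodes
acoustic_delay = propagation_delay(distance, SPEED_OF_SOUND_WATER)
radio_delay = propagation_delay(distance, SPEED_OF_LIGHT)

print(f"speed ratio (light / sound in water): {speed_ratio:,.0f}")
print(f"rate ratio (5G / acoustic):           {rate_ratio:,.0f}")
print(f"acoustic one-way delay over {distance:.0f} m: {acoustic_delay * 1e3:.0f} ms")
print(f"radio one-way delay over {distance:.0f} m:    {radio_delay * 1e6:.2f} us")
```

A one-way delay of roughly a third of a second over 500 m means that protocols relying on frequent acknowledgements or handshakes become slow underwater, independent of the available data rate.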

Large volumes of data on constrained devices

To benefit from the reduced dimensions of seafloor devices and at the same time establish communication within underwater networks, the amount of data transferred within wireless underwater networks needs to be minimized. Underwater sensors record large amounts of data, while having only limited communication capabilities. A hydrophone, for example, samples at 4 kHz with 24 bits per sample, resulting in a data rate of 96 kbit/s (12 kB/s). While this data rate can easily be handled by today’s cellular technologies such as 4G and 5G, underwater acoustic communication is limited to 10s of kbit/s at best, and often much less in practice, due to the above-mentioned challenges. Moreover, underwater communication is highly energy-intensive [13].
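The hydrophone arithmetic can be reproduced directly: 4,000 samples per second times 24 bits per sample gives 96,000 bit/s, i.e., 12 kB/s. The sketch below also derives the resulting daily data volume and how many seconds of link time one second of recording would occupy. The function names and the link rates in the example are hypothetical values chosen for illustration, not measurements.

```python
def raw_data_rate_bps(sample_rate_hz, bits_per_sample, channels=1):
    """Uncompressed sensor data rate in bit/s."""
    return sample_rate_hz * bits_per_sample * channels


def bytes_per_day(data_rate_bps):
    """Data volume in bytes accumulated over 24 hours."""
    return data_rate_bps * 86_400 // 8


def backlog_factor(data_rate_bps, link_rate_bps):
    """Seconds of link time needed to send one second of recorded data."""
    return data_rate_bps / link_rate_bps


hydrophone = raw_data_rate_bps(4_000, 24)
print("hydrophone rate:", hydrophone, "bit/s =", hydrophone // 8, "byte/s")
print("volume per day: ", bytes_per_day(hydrophone), "bytes")

# Hypothetical acoustic link rates in bit/s.
for link_rate in (10_000, 1_000, 100):
    factor = backlog_factor(hydrophone, link_rate)
    print(f"link at {link_rate} bit/s: {factor:.1f} s of airtime per second of data")
```

Any factor above 1 means the link can never keep up with the raw stream, which is why the data has to be reduced on the node before transmission.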

Heterogeneous data

Sensor nodes on the seafloor collect multivariate time-series data: each node can be equipped with a large sensor array including a hydrophone, accelerometer, gyroscope, and pressure and temperature sensors. Often operating in continuous, long-term deployments, these sensors provide data at high temporal resolution and over long durations. However, due to its limited range, a sensor only covers a limited geographic area. Remote sensing, in contrast, covers a vast geographic area. Its temporal resolution is, however, limited to the times at which the satellite or airplane is in the vicinity of the area of interest. Remote sensing data are commonly in the form of 2D or 3D images. Due to their different modalities, time-series and image data cannot be directly fused. Instead, each needs to be analyzed to identify patterns and events of interest that can serve as a common base. The resulting patterns can then be fused and form the basis of further data analytics.
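One way to picture such a common base is to reduce both modalities to events carrying a time and a location, and then to pair events that are close in both. The following sketch is an assumption-laden illustration, not the method proposed in this paper: the `Event` fields, the `fuse` function, the thresholds and the example coordinates are all hypothetical.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


@dataclass(frozen=True)
class Event:
    domain: str    # e.g. "seafloor" (time series) or "satellite" (image)
    kind: str      # label assigned by the per-domain analysis
    time_s: float  # seconds since a shared epoch
    lat: float     # degrees
    lon: float     # degrees


def distance_km(a, b):
    """Great-circle (haversine) distance between two events in km."""
    lat1, lon1, lat2, lon2 = map(radians, (a.lat, a.lon, b.lat, b.lon))
    h = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def fuse(events_a, events_b, max_dt_s, max_dist_km):
    """Pair events from two domains that are close in both time and space."""
    return [(a, b)
            for a in events_a
            for b in events_b
            if abs(a.time_s - b.time_s) <= max_dt_s
            and distance_km(a, b) <= max_dist_km]


# Hypothetical events: one seafloor detection and two satellite detections.
seafloor = [Event("seafloor", "seismic_swarm", 1_000.0, 38.79, 15.21)]
satellite = [
    Event("satellite", "thermal_anomaly", 4_000.0, 38.80, 15.22),
    Event("satellite", "thermal_anomaly", 4_000.0, -6.10, 105.42),
]

for a, b in fuse(seafloor, satellite, max_dt_s=3_600.0, max_dist_km=5.0):
    print(a.kind, "<->", b.kind, f"({distance_km(a, b):.2f} km apart)")
```

The time window has to be chosen generously, since a satellite overpass may lag a seafloor detection by hours or days.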

Distributed data

The overall application setting is distributed, with a built-in hierarchy. The underwater sensor nodes form a distributed system with strong resource constraints in terms of compute power, communication capabilities and energy. In contrast, the remote sensing data are commonly available at a location with vast compute and communication capabilities. Due to limited communication links, data need to be aggregated within the network into compact higher-level representations, i.e., patterns [14]. A pattern is a higher-level representation of an event of interest, such as an underwater sensor detecting an earthquake [15]. Patterns can be identified from the data of one or more sensors in close proximity. Moreover, communicating compact patterns instead of raw data limits the load on the constrained communication system (see Fig. 1).
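To make the size difference between a pattern and raw data tangible, the sketch below packs a hypothetical pattern into a fixed 8-byte message using Python's `struct` module. The field layout (node ID, event type, confidence, timestamp) is an assumption made for illustration; the paper does not define a wire format.

```python
import struct
from dataclasses import dataclass

# Little-endian, no padding: uint16 node, uint8 type, uint8 confidence, uint32 time.
_FORMAT = "<HBBI"
MESSAGE_SIZE = struct.calcsize(_FORMAT)  # 8 bytes


@dataclass(frozen=True)
class Pattern:
    node_id: int      # 0..65535
    event_type: int   # 0..255, e.g. 1 = earthquake (hypothetical code)
    confidence: int   # 0..255, classifier confidence scaled to one byte
    timestamp_s: int  # seconds since a shared epoch, 0..2**32-1


def encode(pattern):
    """Serialize a pattern into a fixed-size byte string."""
    return struct.pack(_FORMAT, pattern.node_id, pattern.event_type,
                       pattern.confidence, pattern.timestamp_s)


def decode(payload):
    """Inverse of encode()."""
    return Pattern(*struct.unpack(_FORMAT, payload))


detected = Pattern(node_id=7, event_type=1, confidence=230, timestamp_s=1_650_000_000)
message = encode(detected)

# Raw alternative: 10 s of 24-bit samples at 4 kHz from a single hydrophone.
raw_window_bytes = 4_000 * 3 * 10

print("pattern message:", len(message), "bytes")
print("raw 10 s window:", raw_window_bytes, "bytes")
print("reduction factor:", raw_window_bytes // len(message))
```

A fixed binary layout avoids the overhead of text formats such as JSON, which matters when every transmitted byte costs energy.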

Applying AI in underwater sensing, communication and data fusion

Event or pattern recognition may also be applied to data from other sensor types, e.g., cameras [16]. When working with distributed, resource-constrained networks, communication needs to be reduced to a bare minimum. This can be done by simply communicating changes in the environment based on predefined parameters. All mentioned techniques can benefit from recent approaches in machine learning, which allow predefined neural networks to be implemented on the network nodes themselves. The fusion of raw or processed data, or ideally of already classified events, may be implemented in a decentralized manner within the network or on a dedicated network node, depending on the requirements of the specific network. To address these challenges, new methodologies that individually analyze each data stream to identify patterns and events of interest need to be devised. Building on neural networks and distributed computing, these methodologies will need to be resource-efficient so that they can run on battery-driven sensor nodes deployed for multiple years on the ocean floor. The patterns and events of interest then serve as a common base and can be fused to form the basis of further data analytics.
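Communicating only changes relative to a predefined parameter can be sketched as a send-on-delta rule: a node transmits a value only if it differs from the last transmitted value by at least a fixed threshold. This is one simple scheme among many and is shown here as an assumption, not as the approach adopted in this paper; the class name, threshold and readings are hypothetical.

```python
class DeltaReporter:
    """Decide whether a new reading is worth transmitting."""

    def __init__(self, threshold):
        if threshold <= 0:
            raise ValueError("threshold must be positive")
        self.threshold = threshold
        self.last_sent = None

    def should_send(self, value):
        """Return True (and remember the value) if it changed enough."""
        if self.last_sent is None or abs(value - self.last_sent) >= self.threshold:
            self.last_sent = value
            return True
        return False


# Hypothetical temperature readings in degrees Celsius.
readings = [10.0, 10.1, 10.2, 11.5, 11.6, 9.0]
reporter = DeltaReporter(threshold=1.0)
sent = [r for r in readings if reporter.should_send(r)]

print("readings:   ", readings)
print("transmitted:", sent)
print(f"messages saved: {len(readings) - len(sent)} of {len(readings)}")
```

The same gate can be placed behind an on-device classifier, so that a node reports only when the detected class changes rather than when a raw value changes.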

Conclusions

There is a demand to extend seafloor monitoring capacities for a safer and more sustainable use of the marine environment, as well as for building up a higher resilience against marine hazards. This requires a new generation of cost-efficient and smart seafloor sensor networks, which complement established data streams (e.g., from remote sensing or ship-based surveying) that often have long coverage gaps or are incapable of monitoring the submarine realm at all. Overall, we argue that new methodologies for resource-efficient and distributed cross-domain data fusion are needed, serving as data-analytics pipelines that derive highly aggregated patterns of interest from raw data. These patterns will (1) serve as a common base in time and space for the fusion of heterogeneous data, and (2) be sufficiently small to be transmitted efficiently in resource-constrained settings.

References

1. Ryabinin V, Barbière J, Haugan P, Kullenberg G, Smith N, McLean C, Rigaud J (2019) The UN decade of ocean science for sustainable development. Front Mar Sci 6:470

2. Schäfer A, Daniell JE, Skapski JU, Mohr S (2022) CEDIM Forensic Disaster Analysis Group (FDA) “Volcano & Tsunami Hunga Tonga”. Report No. 1

3. Grilli ST, Tappin DR, Carey S, Watt SF, Ward SN, Grilli AR, Muin M (2019) Modelling of the tsunami from the December 22, 2018 lateral collapse of Anak Krakatau volcano in the Sunda Straits, Indonesia. Sci Rep 9(1):1–13

4. Furtney MA, Pritchard ME, Biggs J, Carn SA, Ebmeier SK, Jay JA, Reath KA (2018) Synthesizing multi-sensor, multi-satellite, multi-decadal datasets for global volcano monitoring. J Volcanol Geotherm Res 365:38–56

5. Chaussard E, Amelung F (2012) Precursory inflation of shallow magma reservoirs at west Sunda volcanoes detected by InSAR. Geophys Res Lett. https://doi.org/10.1029/2012GL053817

6. Walter TR, Haghshenas Haghighi M, Schneider FM, Coppola D, Motagh M, Saul J, Gaebler P (2019) Complex hazard cascade culminating in the Anak Krakatau sector collapse. Nat Commun. https://doi.org/10.1038/s41467-019-12284-5

7. De Luca C, Valerio E, Giudicepietro F, Macedonio G, Casu F, Lanari R (2021) Pre- and co-eruptive analysis of the September 2021 eruption at Cumbre Vieja volcano (La Palma, Canary Islands) through DInSAR measurements and analytical modelling. Geophys Res Lett. https://doi.org/10.1029/2021GL097293

8. Zhang Y, Chen Y, Zhou S, Xu X, Shen X, Wang H (2015) Dynamic node cooperation in an underwater data collection network. IEEE Sensors J 16(11):4127–4136

9. IPCC (2018) Global Warming of 1.5 °C. An IPCC Special Report on the impacts of global warming of 1.5 °C above pre-industrial levels and related global greenhouse gas emission pathways, in the context of strengthening the global response to the threat of climate change, sustainable development, and efforts to eradicate poverty [Masson-Delmotte, V., P. Zhai, H.-O. Pörtner, D. Roberts, J. Skea, P.R. Shukla, A. Pirani, W. Moufouma-Okia, C. Péan, R. Pidcock, S. Connors, J.B.R. Matthews, Y. Chen, X. Zhou, M.I. Gomis, E. Lonnoy, T. Maycock, M. Tignor, and T. Waterfield (eds.)]

10. Halland EK (2019) Offshore storage of CO2 in Norway. Geophys Geosequestration. https://doi.org/10.1017/9781316480724

11. Chadwick RA, Zweigel P, Gregersen U, Kirby GA, Holloway S, Johannessen PN (2004) Geological reservoir characterization of a CO2 storage site: the Utsira Sand, Sleipner, Northern North Sea. Energy 29(9–10):1371–1381

12. Bohloli B, Bateson L, Berndt C, Bjørnarå TI, Eiken O, Estublier A, Frauenfelder R, Karstens J, Orio RM, Meckel T, Mondol NH, Park J, Soroush A, Soulat A, Sparrevik PM, Vincent C, Vöge M, Waarum IK, White J, Xue Z, Zarifi Z, Gutiérrez IÁ, Vidal JAM (2021) Assuring integrity of CO2 storage sites through ground surface monitoring (SENSE). Open access [paper]. In: 15th international conference on greenhouse gas control technologies, GHGT-15, Abu Dhabi, 15–18 March 2021. https://doi.org/10.2139/ssrn.3818971

13. Renner BC, Heitmann J, Steinmetz F (2020) AHOI: Inexpensive, low-power communication and localization for underwater sensor networks and μAUVs. ACM Trans Sens Netw (TOSN) 16(2):1–46

14. Poirot V, Landsiedel O (2021) Dimmer: self-adaptive network-wide flooding with reinforcement learning. In: 2021 IEEE 41st international conference on distributed computing systems (ICDCS). IEEE, pp 293–303

15. Profentzas C, Almgren M, Landsiedel O (2021) Performance of deep neural networks on low-power IoT devices. In: Proceedings of the workshop on benchmarking cyber-physical systems and Internet of things, pp 32–37

16. Li J, Chen T, Yang Z, Chen L, Liu P, Zhang Y, Sun X (2021) Development of a buoy-borne underwater imaging system for in situ mesoplankton monitoring of coastal waters. IEEE J Ocean Eng 47(1):88–110

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zainab, T., Karstens, J. & Landsiedel, O. Cross-domain fusion in smart seafloor sensor networks. Informatik Spektrum 45, 290–294 (2022). https://doi.org/10.1007/s00287-022-01486-9

DOI: https://doi.org/10.1007/s00287-022-01486-9